Event Kit

Data Development

Data development lets your workshop participants get a feeling for the importance of engaging with data, explore patterns and limitations of general-purpose models, and use those models to create data for more accurate, smaller, faster and fully private task-specific components that you can run yourself.

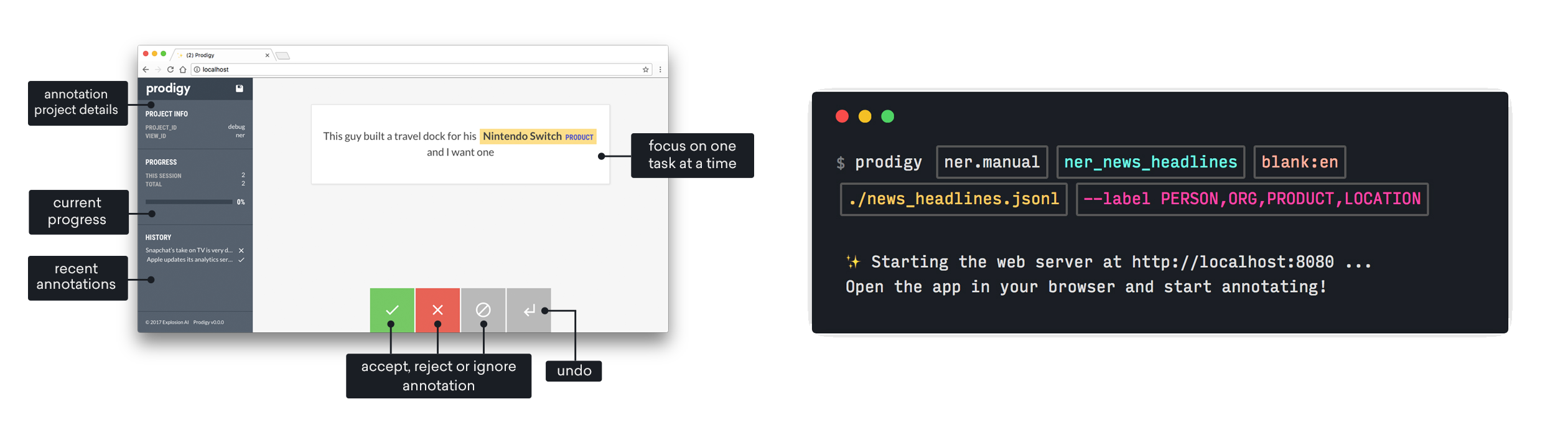

This kit includes workflows and tips for hosting a LAN Party on data development, annotation and data exploration. It can be applied to any data and topic you choose to work with and uses the Prodigy annotation tool, which is available for free for your party. The data and task you use with this kit should ideally focus on information extraction, i.e. categorizing text or extracting structured information from it.

Introduction to Prodigy

Prodigy is a modern annotation tool for creating data for machine learning models. You can also use it to help you inspect and clean your data, do error analysis and develop rule-based systems to use in combination with your statistical models. At your LAN Party, you can use Prodigy to collaboratively explore and analyze data, and create datasets for training or evaluating models.

Prodigy comes with an efficient modern web application and Python library, and includes a range of built-in workflows, also called “recipes”. It’s fully scriptable in Python and lets you implement your own custom recipes for loading and pre-processing data and automating annotation. If you can do something in Python, you can use it with Prodigy!

Setup and installation

Prodigy ships as a Python library and can be installed via pip like any other package, with --extra-index-url specifying the download server and license key. We recommend using a fresh virtual environment to get started.

Get your Prodigy license key

To install and use Prodigy, you will need a license key. We’re happy to support your LAN Party with a free license for you and your participants – just email us and include the details of your event. Once you have your key, you can print out the instructions template and fill it in.

Install Prodigy

Once installed, the main way to interact with Prodigy is via the command line using the prodigy command, followed by the name of the recipe you want to run and any optional settings. For example, to make sure everything is set up correctly and to view details about your installation, you can run the stats recipe:

```
prodigy stats
```

Annotation recipes will start the web server on http://localhost:8080. You can then open the app in your browser and begin annotating. The data is automatically saved to the database in the background, and you can also save manually by clicking the save button or pressing cmd+s. Prodigy also supports other keyboard shortcuts for faster and more efficient annotation.

Data preparation

Prodigy can load in data in a variety of input formats, including plain text, JSON or CSV. An especially good option is JSONL, newline-delimited JSON: it gives you the flexibility of JSON, but can be read in line by line and allows faster loading times for large datasets.
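If your raw texts are already available in Python, a few lines of standard-library code can write them out as JSONL and read them back one record at a time. This is a minimal sketch: the file name examples.jsonl and the helper names write_jsonl and read_jsonl are illustrative and not part of Prodigy.

```python
import json
from pathlib import Path


def write_jsonl(path, texts):
    """Write one {"text": ...} record per line (newline-delimited JSON)."""
    with Path(path).open("w", encoding="utf8") as f:
        for text in texts:
            # ensure_ascii=False keeps non-ASCII characters readable in the file
            f.write(json.dumps({"text": text}, ensure_ascii=False) + "\n")


def read_jsonl(path):
    """Yield records one at a time, so large files never need to fit in memory."""
    with Path(path).open(encoding="utf8") as f:
        for line in f:
            line = line.strip()
            if line:  # skip blank lines
                yield json.loads(line)


write_jsonl("examples.jsonl", ["This is a text", "This is another text"])
for record in read_jsonl("examples.jsonl"):
    print(record["text"])
```

Each line of the resulting file is a complete JSON object, which is what makes line-by-line loading possible.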

```
{"text": "This is a text"}
{"text": "This is another text"}
```

Data exploration

If it’s your first time or you’re not sure how to get started, begin with data exploration to get a feeling for the data. The mark recipe in Prodigy loads in your data and shows it in one of the available interfaces, for example text.

View data

You can now move through the examples and click the green accept button or use the keyboard shortcut a to move on to the next example.

Ideas and activities

- Use the data exploration phase to plan out your annotation task and label scheme. For example, if you want to group the texts into categories, which category types make sense and reflect the reality of the data? As you continue exploring, the schema will likely change and evolve.

- Go through the data together in the group and talk about what you see and what stands out. If participants are exploring individually, take screenshots of any interesting or funny examples and edge cases you come across and discuss them in the group later.

- Use the accept (a) and reject (x) decisions to sort the examples into data to include and use further, and data to exclude from your experiments. When you export your annotations with db-out, this decision will be available via the "answer" key, making it easy to filter your data.
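Filtering on the "answer" key takes only a few lines of Python. This is a minimal sketch, assuming you have saved the db-out output to a JSONL file and read it line by line; the sample records are made up and only show the keys relevant here. The helper name filter_by_answer is hypothetical.

```python
import json


def filter_by_answer(lines, answer="accept"):
    """Keep only records whose "answer" key matches, e.g. "accept" or "reject"."""
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        record = json.loads(line)
        if record.get("answer") == answer:
            yield record


exported = [
    '{"text": "Keep me", "answer": "accept"}',
    '{"text": "Drop me", "answer": "reject"}',
    '{"text": "Not sure", "answer": "ignore"}',
]
accepted = list(filter_by_answer(exported))
print([r["text"] for r in accepted])  # ['Keep me']
```

With a real export, you would pass in an open file object instead of the list, since iterating over a file also yields one line at a time.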

Data annotation

Once you have decided on a label scheme, you can use Prodigy to create annotations on your data. For example, the ner.manual recipe starts the annotation server with one or more labels and lets you highlight spans in the text. Prodigy also supports using match patterns or an existing pretrained model or general-purpose LLM to pre-annotate examples for you.
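Match patterns are usually kept in a JSONL file as well, with a "label" and a "pattern" on each line. As a minimal sketch, assuming you collected a list of terms during exploration (the labels, terms and the helper make_patterns below are made up for illustration), you could generate token-based patterns like this:

```python
import json


def make_patterns(terms_by_label):
    """Build one token-based match pattern per term, matching case-insensitively."""
    patterns = []
    for label, terms in terms_by_label.items():
        for term in terms:
            # one {"lower": ...} token dict per word, in the spaCy Matcher style
            tokens = [{"lower": word.lower()} for word in term.split()]
            patterns.append({"label": label, "pattern": tokens})
    return patterns


terms = {"ORG": ["Acme Corp", "Globex"], "PRODUCT": ["Widget"]}
with open("patterns.jsonl", "w", encoding="utf8") as f:
    for pattern in make_patterns(terms):
        f.write(json.dumps(pattern) + "\n")
```

Splitting on whitespace only approximates spaCy’s tokenization, so terms containing punctuation (like "U.S.") may need hand-written patterns.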

Named entity recognition

After saving your annotations, you can run db-out to export your annotated data to a JSONL (newline-delimited JSON) file in Prodigy’s format. You can also use the train recipe to train a spaCy model component from your collected annotations.
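After exporting, you can load the records in Python and pull out the highlighted spans, for example to count how often each label occurs. This is a minimal sketch, assuming Prodigy’s NER format where each record has a "text", an "answer" and a "spans" list with character "start"/"end" offsets and a "label". The sample record and the helper entity_mentions are made up for illustration.

```python
import json
from collections import Counter


def entity_mentions(records):
    """Yield (entity text, label) for every span in accepted records."""
    for record in records:
        if record.get("answer") != "accept":
            continue  # skip rejected and ignored examples
        for span in record.get("spans", []):
            # "start" and "end" are character offsets into the record's text
            yield record["text"][span["start"]:span["end"]], span["label"]


lines = [
    '{"text": "Acme Corp hired Jane Doe", "answer": "accept", '
    '"spans": [{"start": 0, "end": 9, "label": "ORG"}, '
    '{"start": 16, "end": 24, "label": "PERSON"}]}',
]
records = [json.loads(line) for line in lines]
mentions = list(entity_mentions(records))
print(mentions)  # [('Acme Corp', 'ORG'), ('Jane Doe', 'PERSON')]
print(Counter(label for _, label in mentions).most_common())
```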

Export data

Hardware requirements

Data annotation will work fine on your local machine, but for training, it’s helpful to have a GPU available, especially if you’re looking to train models using transformer embeddings. If you don’t have access to a GPU, you can still run training experiments – it might just be slower or you’ll have to use a non-transformer config, which can lead to lower accuracies.

Ideas and activities

- Have all participants log the time spent annotating data. This lets you calculate the person hours (cumulative time it would have taken a single person) later on. You may find that it takes surprisingly few person hours to create a high-quality dataset that’s large enough to train a task-specific model.

- Take screenshots of any interesting or funny examples and edge cases you come across during annotation. You can later present and discuss them in the group.

- If you’re unsure about an annotation decision, use the ignore button or press space to skip the example. It’s usually not worth dwelling on a single decision for too long; staying in the flow is more important.

- If multiple participants are annotating, have a portion of the data annotated by everyone. Using Prodigy’s review recipe and interface, you can then compare their decisions on the same examples and discuss disagreements with the group (also see the discussion prompts for more ideas).

- Break up your text into smaller chunks and separate the task into multiple steps wherever possible. This greatly reduces the cognitive load on the annotator (humans have a cache, too!) and makes the process more efficient and less error-prone. Multiple passes over the data may sound like more work, but can actually be more than 10× faster overall!

- If you’re annotating names, concepts and ideas, analyze how mentions change over time. For example, which topics are mentioned the most at a given point? How does it change? You can also visualize your results as a bar chart race. This tutorial includes an example. You can work directly from your annotations, or train a model to automatically process larger volumes of data.
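Two of the activities above come down to a little arithmetic. Adding up everyone’s logged minutes gives the person hours, and for the portion of the data annotated by everyone, a rough pairwise agreement score can point you to the examples worth discussing before you open them in review. This is a minimal sketch and not how Prodigy’s review recipe works: it assumes each exported record carries "_input_hash" and "_annotator_id" keys (which Prodigy typically adds when annotators use named sessions) and counts two annotations as agreeing only if their spans are identical. All names and numbers are made up.

```python
from collections import defaultdict
from itertools import combinations


def person_hours(minutes_by_participant):
    """Cumulative annotation time across all participants, in hours."""
    return sum(minutes_by_participant.values()) / 60


def span_set(record):
    """Represent a record's spans as a hashable set of (start, end, label)."""
    return frozenset((s["start"], s["end"], s["label"]) for s in record.get("spans", []))


def pairwise_agreement(records):
    """Share of commonly annotated inputs on which each annotator pair agrees."""
    # input hash -> {annotator id -> span set}
    by_input = defaultdict(dict)
    for record in records:
        by_input[record["_input_hash"]][record["_annotator_id"]] = span_set(record)
    annotators = sorted({a for anns in by_input.values() for a in anns})
    scores = {}
    for a, b in combinations(annotators, 2):
        shared = [anns for anns in by_input.values() if a in anns and b in anns]
        if shared:  # only score pairs that annotated at least one input in common
            scores[(a, b)] = sum(anns[a] == anns[b] for anns in shared) / len(shared)
    return scores


minutes = {"ana": 90, "ben": 45, "chen": 105}
print(person_hours(minutes))  # 4.0

records = [
    {"_input_hash": 1, "_annotator_id": "ana", "spans": [{"start": 0, "end": 4, "label": "ORG"}]},
    {"_input_hash": 1, "_annotator_id": "ben", "spans": [{"start": 0, "end": 4, "label": "ORG"}]},
    {"_input_hash": 2, "_annotator_id": "ana", "spans": []},
    {"_input_hash": 2, "_annotator_id": "ben", "spans": [{"start": 5, "end": 9, "label": "PERSON"}]},
]
print(pairwise_agreement(records))  # {('ana', 'ben'): 0.5}
```

Exact span matching is strict: two annotators who differ by a single character count as disagreeing, which is often exactly the kind of case worth raising in the group.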

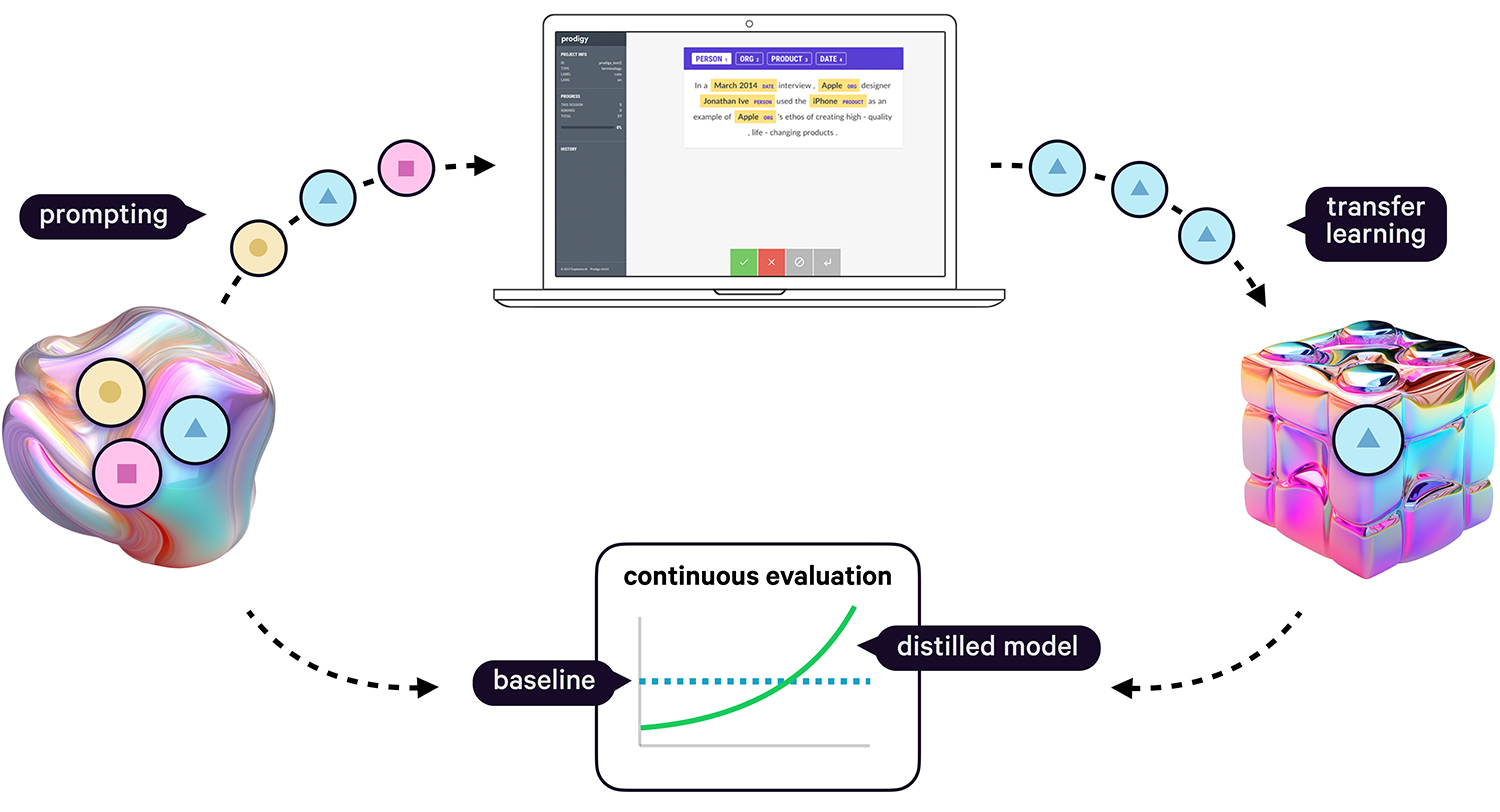

Human-in-the-loop distillation

One of the most powerful data development use cases is distilling larger general-purpose LLMs into smaller, task-specific components. You can do this by using the LLM to pre-annotate and help you create data faster, and then train a small model on the created annotations.

In this workflow, the LLM is only used during development, which is not only more cost-effective, but also ensures no real-world runtime data has to be sent to external model APIs or slow generative models. In production, the system only uses the distilled task-specific component that’s more accurate, faster and fully private.

Ideas and activities

- Combine annotations by all participants and train a task-specific model on them. Don’t forget to hold back a sample of the data for evaluation. (If you’re not sharing a database, you can use db-in to import annotations.) Compare the accuracy, speed and model sizes you can achieve with different training configurations.

- Run the train-curve command to train on increasing portions of the data and see how accuracy changes, and whether it is likely to improve further with more examples. Setting --show-plot will render a visual plot of the curve in your terminal. As a rule of thumb, if accuracy still improves within the last 25% of the data, training with more examples will likely result in better accuracy.

- Use a general-purpose LLM to label the data for you (e.g. with ner.llm.fetch) and evaluate its accuracy on a test set of your manually created and corrected annotations. This is the baseline you’re looking to beat with your task-specific model.

- Recreate the experiment from the PyData NYC workshop where we used a general-purpose LLM to help annotate data collaboratively with the participants, corrected the annotations and trained a 20× faster model that beat the LLM baseline.
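To make the evaluation ideas above concrete, here is a minimal sketch of holding back an evaluation sample, scoring LLM predictions against your corrected annotations with exact-match span precision, recall and F1, and applying the train-curve rule of thumb. It is not Prodigy’s own evaluation code: it assumes NER-style records with "text" and "spans", pairs gold and predicted records by their text, and the helper names, example records and curve scores are made up.

```python
import random


def train_eval_split(records, eval_fraction=0.2, seed=0):
    """Shuffle a copy of the records and hold back a fraction for evaluation."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    n_eval = max(1, int(len(shuffled) * eval_fraction))
    return shuffled[n_eval:], shuffled[:n_eval]


def spans(record):
    """Spans as a set of (start, end, label) tuples for exact matching."""
    return {(s["start"], s["end"], s["label"]) for s in record.get("spans", [])}


def span_prf(gold_records, pred_records):
    """Exact-match span precision, recall and F1, pairing records by their text."""
    pred_by_text = {r["text"]: r for r in pred_records}
    tp = fp = fn = 0
    for gold in gold_records:
        g = spans(gold)
        p = spans(pred_by_text.get(gold["text"], {}))  # missing prediction = no spans
        tp += len(g & p)
        fp += len(p - g)
        fn += len(g - p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def still_improving(curve_scores):
    """Rule of thumb: did the last portion of the data still improve the score?"""
    return len(curve_scores) >= 2 and curve_scores[-1] > curve_scores[-2]


gold = [{"text": "Acme Corp hired Jane Doe", "spans": [
    {"start": 0, "end": 9, "label": "ORG"}, {"start": 16, "end": 24, "label": "PERSON"}]}]
llm = [{"text": "Acme Corp hired Jane Doe", "spans": [
    {"start": 0, "end": 9, "label": "ORG"}, {"start": 16, "end": 20, "label": "PERSON"}]}]
print(span_prf(gold, llm))  # (0.5, 0.5, 0.5)
print(still_improving([0.61, 0.70, 0.74, 0.77]))  # True
```

The LLM’s score on the held-back set is the baseline; scoring your trained model’s predictions on the same set with the same function keeps the comparison fair.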

Discussion prompts

- What are the most common mistakes the model made during pre-annotation? Did you come across any patterns? What can this tell us about the model and its original training data?

- Which interesting edge cases did you find while exploring the data? Where did the label scheme not match the reality of the examples? What potential impacts could this have on the model? And can the label scheme be adjusted?

- If examples were annotated by multiple participants, where did they disagree and why? Is there a correct answer or is the decision entirely subjective? What does this mean for the label scheme and model?

- Were you able to beat the LLM baseline with annotated data? How does your task-specific model compare in terms of accuracy, speed and model size? What could this mean for data development workflows in practice and use cases that prioritize speed and/or data privacy?